(#140) Are we in an AI bubble 🧐? Is Databricks worth $100 bn 💵?

🚗 Ridesharing wars; no winners…yet

Thank you for being one of the 4,500+ minds reading this newsletter.

Here is what you’ll find in this edition:

Are we in an AI bubble? 🧐

How DeepSeek (aka China’s OpenAI) started and why it matters

🚗 Ridesharing wars; no winners…yet

AI will disrupt all SaaS and more

Avoid Chinese stocks, no matter how “juicy” they appear

What is Databricks really doing? And is it worth it?

…and more 👇

Onto the update:

Are we in an AI bubble? 🧐

Two things can be true. The "AI market" looks frothy, and yet the underlying platform shift is real and compounding. Even Sam Altman says we’re in a bubble, while also saying AI is the most important thing in a long time 😅

Historically, bubbles are CAPEX stories. Railways, canals, telegraph, fiber in the 1990s... too much money lays too much pipe, and we spend the next decade figuring out what to do with it. The surplus infrastructure becomes the next platform’s foundation.

Where’s the CAPEX now? Chips, sheds, and sockets: GPUs, data centers, and power. Hyperscalers have lifted capital plans dramatically (e.g., Alphabet guiding tens of billions for DCs/servers), and cloud revenue is surging alongside.

But the bottleneck is shifting from silicon to electrons. The IEA now projects data-centre electricity use to more than double by 2030. That pushes the constraint from "How many H100s can I get?" to "Where do I plug them in?": grid capacity, interconnect queues, and 24/7 clean supply.

The scarce factor (and therefore the speculative one) becomes firm, around-the-clock power near big substations.

So if this cycle rhymes with history, the overbuild won’t be fiber in the ground. It’ll be electrons-to-racks: transformers, transmission, firmed renewables, maybe nuclear PPAs (i.e., power purchase agreements). The “AI bubble” narrative today is priced into chips and model startups, but the actual bubble risk may migrate to energy infrastructure financing that assumes unbounded AI load curves.

What to watch:

1/ 24/7 clean-energy PPAs at rising strike prices and longer tenors

2/ Land and interconnect premiums around high-voltage nodes

3/ Transformer and substation lead times as a new "H100"

4/ Politically driven siting and grid-upgrade packages

Net: Yes, there are bubbly bits. But if you’re pattern-matching past tech manias, the real excess (if and when it comes) likely forms around power, not GPUs. Chips are the billboard, but energy is the balance sheet. (Sources: CNBC, Business Insider)

How DeepSeek (aka China’s OpenAI) started and why it matters

DeepSeek’s edge wasn’t "finance", but policy

The funny thing about DeepSeek is that it didn’t start by learning balance sheets. It started by learning Beijing. A hedge fund trained a model not on factor screens or price signals, but on meeting minutes, speeches, and press releases from Chinese officials: the documents that signal what to build, where to invest, and who gets oxygen. Then it traded the implications: long here, short there.

If true, that says a lot about where alpha lives now:

1/ When the state sets the roadmap through policies, the roadmap is the model.

2/ LLMs turn vague guidance ("encourage X, constrain Y") into position sizing.

3/ Orthogonal data beats consensus data. Everyone reads earnings, but fewer models actually parse bureaucratic prose.

4/ Machines don’t wait for analysts to translate speeches into decks.

5/ If industrial policy is the benchmark, the index is being rewritten in real time.

The broader point: LLMs are a universal compiler for intent. In markets where policy is a leading indicator, the best "quant" might be the model that reads the Politburo better than you read a 10-K.

The text that matters isn’t the filing, but the memo. LINK

Ridesharing wars; no winners…yet

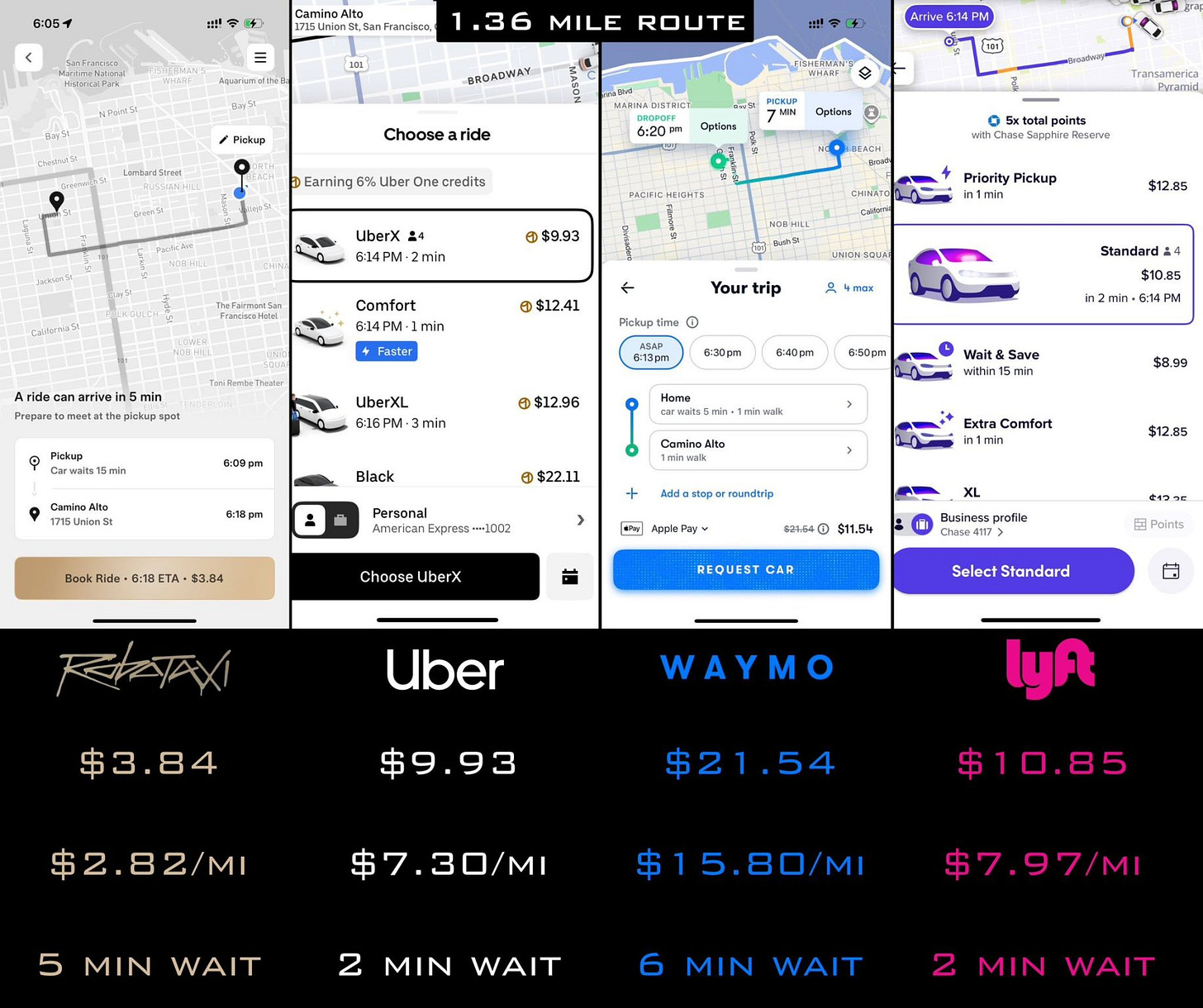

You know, the whole idea with ridesharing was supposed to be that you tap a button and a car appears, like magic. And for a while, that worked, until it didn’t. Now we’ve got a new flavor of the same button, except instead of summoning an Uber or Bolt, you might accidentally end up hailing a robot that costs five times more and takes longer to show up.

Want to feel nostalgic for the early 2010s? Take the UberX for $9.93 and get there in 2 minutes. Want to feel like you’re living in the future and paying for the privilege? Hop in a Waymo for $21.54, and enjoy your 6-minute wait for the self-driving car that may or may not understand one-way streets.

But the best part comes from something called "Robotaxi", which will get you there for less than a third of what Lyft charges for the same distance. Which raises the real question: are we disrupting transportation or just rediscovering the bus with worse unit economics? The market has decided that software eats everything except, apparently, margins.

In short, this is a beautiful little chart of capitalist inefficiency: four companies, four price points, four ideas of "value", and one brutal truth. No one is winning this race, especially not your wallet.

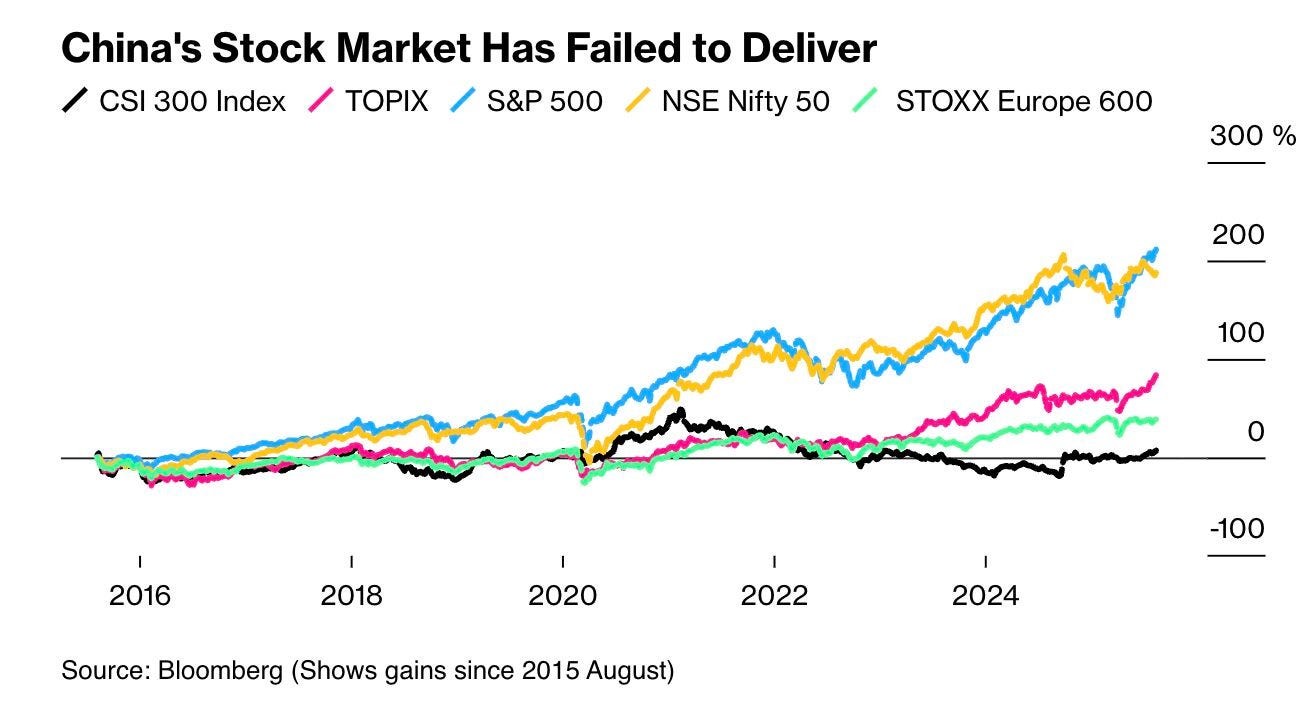

Avoid Chinese stocks, no matter how “juicy” they appear

China’s stock market is basically a very expensive theme park where the rides all break down just as you get on them. The CSI 300 has spent a decade going sideways while the S&P 500 tripled and India quietly sprinted past.

This feels like buying a lottery ticket where the grand prize is…a refund. Structurally, it was never designed to make investors rich; its job was to funnel household savings into state projects and keep factories humming.

Today it’s an $11 trillion market, but one that behaves less like a wealth engine and more like a piggy bank for the Party. Investors get the privilege of subsidizing industrial policy, IPO fraud, and endless "reforms" that never seem to reform much. LINK

AI will disrupt all SaaS and more

We’ve spent 30 years stacking abstractions: clouds on data centers, APIs on APIs, SaaS on clouds. The tower works because most of it is hidden.

Now, AI arrives as a probabilistic interface for messy systems. It doesn’t replace the stack, but it routes around brittle edges and makes old workflows addressable by natural language.

// Short term: wrappers and copilots, i.e., RPA with reasoning. They sit on top, create quick ROI, and expose the weird seams of legacy software.

// Medium term: some middle layers get automated. Glue code, integrations, support, and documentation shift from human-maintained to model-generated. New failure modes appear (nondeterminism, drift).

// Value capture compresses to three places: chips & cloud (scale), model platforms (distribution & defaults), and vertical apps (workflow ownership). Everything else risks commoditization.

// Regulation and reliability move from optional to product features: provenance, audit trails, data governance, evaluation.

The interesting question is: "Which bricks become cheap, and who owns the new chokepoints?"

The slingshot matters because it’s cheap to try. Small experiments at the edge can rewire where the profit pools sit.

If the stack stays up, we call it leverage; if it falls down, we call it "unexpected model behavior". Either way, the birds are already mid-air.

What is Databricks really doing? And is it worth it?

1/ Databricks is the “plumbing that became strategy”. It sells the platform that lets enterprises corral disparate data into one place (the lakehouse), clean and govern it, and then point analytics and AI at it. In practice, that means data scientists and engineers use Databricks to wrangle huge datasets and build models; Adidas mining millions of reviews for product feedback is the canonical example. More recently, the company has leaned into the AI platform narrative: partnerships with Palantir and SAP to fuse data estates, and a push to build databases aimed at AI agents (i.e., machine users instead of human dashboards). That shifts the story from “faster Spark” to “the control plane for AI-era data”, which is a much bigger ambition.

2/ So why $100 bn? Two things: momentum and position. Momentum is the market: Databricks is reportedly closing a round at a $100 bn valuation, which is up ~61% from December 2024, against a backdrop of investors scrambling for late-stage AI exposure post-Figma and Palantir. Position is the stack: if AI is the new application layer, then whoever owns the data/feature store, governance, and workload orchestration owns the switching costs. The company says it’s pouring new capital into product and the AI talent war (nearly 9,000 employees, with thousands more planned) and is happy to delay an IPO, because private investors will fund the “default substrate for enterprise AI” thesis a bit longer. Even management is saying the quiet part out loud: they think there’s a path to a trillion-dollar outcome, if they execute.

3/ Is $100 bn too much? If Databricks becomes the de facto system of intelligence that sits across SAP/Palantir/your data warehouse and powers agentic workflows, the number won’t look crazy. Platforms that standardize developer behavior and enterprise governance compound for years. If, instead, hyperscalers rebundle the stack, open-source keeps eroding the moat, or CIOs default to incumbent suites, this looks rich. Today’s round is a bet that AI spend pulls data gravity toward a neutral control plane, not deeper into cloud-specific silos. That’s the core: you’re underwriting category dominance, not a point product. On that reading, $100 bn is aggressive but coherent so long as Databricks keeps turning “pipes” into policy, workflow, and lock-in faster than everyone else. LINK
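A quick sanity check on the step-up quoted in 2/: the sketch below backs out the earlier valuation implied by "$100 bn, up ~61%". The function and variable names are illustrative, and the ~61% figure is approximate, so treat the result as a rough estimate rather than a reported number.

```python
# Back-of-the-envelope check on the valuation step-up quoted above.
# Inputs come from the text: a reported $100 bn valuation, "up ~61%".
# The implied prior valuation is derived here, not a reported figure.


def implied_prior_valuation(current_bn: float, step_up_pct: float) -> float:
    """Return the earlier valuation implied by a percentage step-up."""
    return current_bn / (1 + step_up_pct / 100)


current_bn = 100.0   # reported valuation, in $ bn
step_up_pct = 61.0   # approximate step-up since December 2024, in percent

prior_bn = implied_prior_valuation(current_bn, step_up_pct)
added_bn = current_bn - prior_bn

print(f"Implied prior valuation: ~${prior_bn:.1f} bn")
print(f"Value added since then:  ~${added_bn:.1f} bn")
```

On these inputs the implied earlier mark is roughly $62 bn, so the new round adds close to $38 bn on paper.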

PRINCIPLE: on luck 🍀

Aaaah yes, luck. That mysterious force that apparently shows up out of nowhere and lands on your desk like a delivery package.

Except – well, no.

The people who say “you were just lucky” usually weren’t there at 11:47 p.m. when you were rewriting the thing for the fifth time. Or when you shipped the version that didn’t work. Or when you pitched the idea and got ghosted. Again.

Luck is real, sure. But it’s extremely picky. It prefers hanging out with people who are already sweating. People who show up. People who hit “publish” even when no one’s watching.

So yes, maybe you got lucky.

But it’s weird how luck only seems to visit the ones who’ve been grinding in the dark for years.

Funny how that works.