(#146) How 🍏 Apple got disrupted by a toy in 5 steps

OpenAI is the new Microsoft

Thank you for being one of the 4,500+ minds reading this newsletter.

Here is what you’ll find in this edition:

How Apple got disrupted by a toy in 5 steps

[Essay] The AI Bubble will build the grid

OpenAI is the new Microsoft

AI will commoditize education

🚜 Caterpillar is a cyclical stock and, apparently, connected to many businesses

…and more 👇

Onto the update:

How Apple got disrupted by a toy in 5 steps

The missed platform

Apple’s strategic blindness to large language models (LLMs) is starting to look eerily familiar. In Apple’s Lost Decade, I argued that Cupertino “optimized for the wrong variable: beauty over capability”. The same logic applies today. Apple dismissed LLMs as “just chatbots”, much as Sony once dismissed the iPod as “just a music player”. But when a “toy” scales to 800 million weekly users, it stops being a product and becomes a platform. ChatGPT has crossed that threshold. What Apple saw as a UX gimmick, the rest of the world turned into an operating system for cognition.

The disruption pattern

In my Toy Story essay, I described how great firms get disrupted not by superior technology but by “seemingly insignificant innovations that open new distribution channels”. That’s exactly what happened here. LLMs didn’t breach Apple’s moat of hardware and ecosystem; they bypassed it. They live in the browser, in APIs, and soon in agents. The chatbot interface, mocked as trivial, was actually a Trojan horse for a new computing model: text as the universal UI. Apple’s fixation on polished design blinded it to rough-edged breakthroughs.

The aggregation shift

Apple once aggregated attention through devices. OpenAI now aggregates intent through dialogue. When you type into ChatGPT, you’re choosing an outcome, not an app. That collapses the App Store model itself: 800 million people a week simply want something done. And unlike iPhones, LLMs learn from every request, so the feedback loop compounds.

The platform inversion

This inversion weakens Apple’s traditional leverage: control over the OS. If ChatGPT becomes the layer between users and applications, Apple’s beautifully designed home screens become mere windows into someone else’s intelligence stack. Just as Microsoft’s control of the desktop mattered less once Google’s search box became the front door to the web, Apple risks losing the interface war to the chat box. Ironically, the company that made computing intimate is now watching another firm make intelligence personal.

The next lost decade

Of course, Apple still has more than two billion active devices. But its moat (integration) is losing relevance in a world where cognition itself is the platform. The lesson echoes the closing line of Toy Story: “Disruption doesn’t announce itself as a threat; it arrives as a toy”. Apple laughed at the chatbot. Now that toy speaks every language, codes every app, and serves 800 million users a week. If the first lost decade was about missing cloud software, the next may be about missing artificial minds. TechCrunch

[Essay] The AI Bubble will build the grid

GPUs are the tulips of our time: scarce, beautiful, and rapidly depreciating (useful lives of roughly 1–3 years). They’re not durable infrastructure; they’re the speculative steel beams of a half-finished cathedral. Data centers last longer, but even those will look outdated once the next wave of compute efficiency hits. The real prize, the one that outlasts every crash, will be power. (read the full text HERE)

OpenAI is the new Microsoft

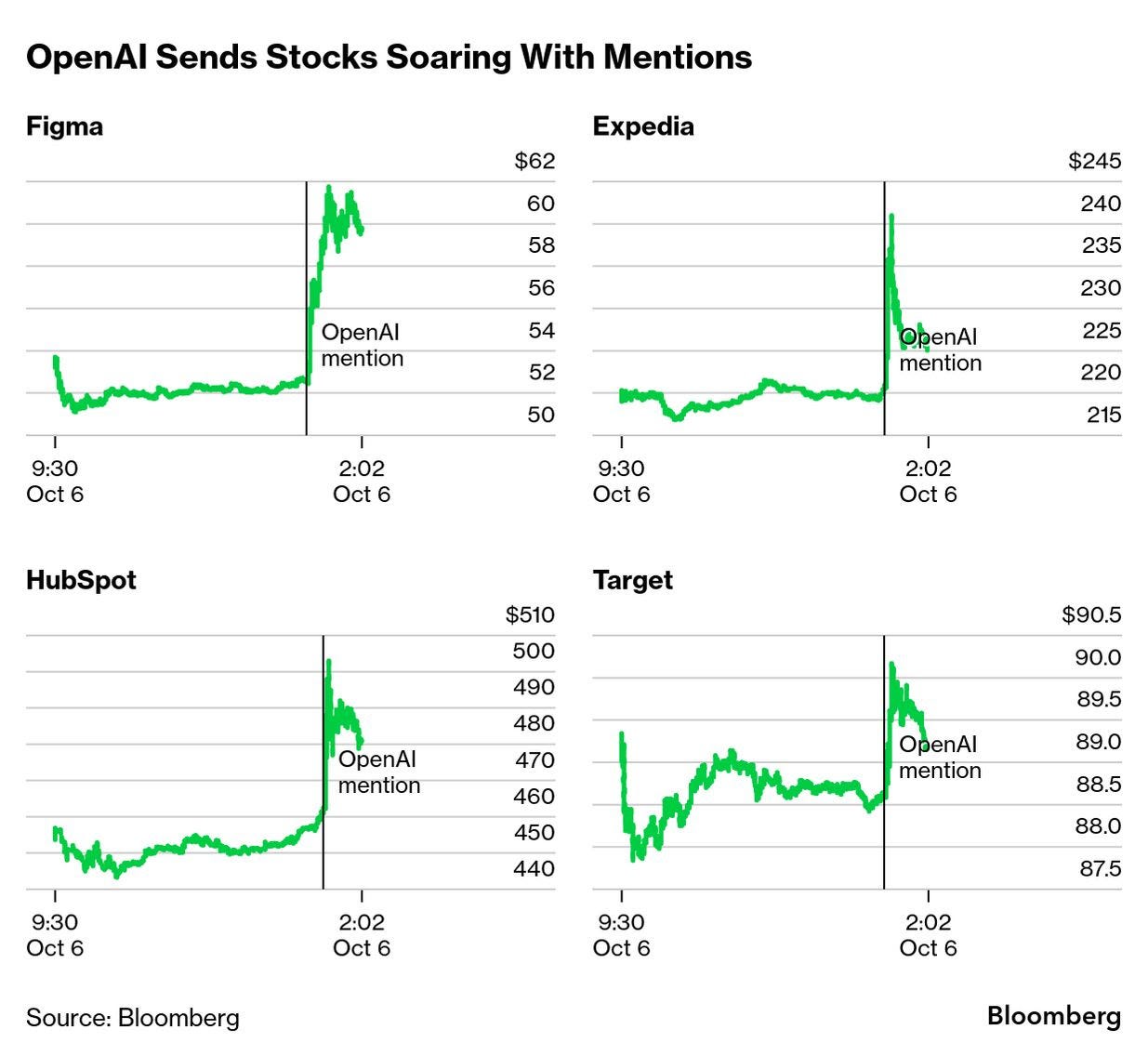

OpenAI has effectively become the Jerome Powell of earnings calls: one offhand mention, and stocks like Figma, HubSpot, Expedia, and Target (!) go vertical. Doesn’t matter if the deal is vague, cash-flow negative, or entirely symbolic. Say the words “we’re partnering with OpenAI”, and you get an instant repricing of your company’s future like it just discovered gravity. Altman sneezes and adds $4B to your market cap. It’s not even multiple expansion, it’s multiple invention.

The AMD and Oracle moves were big, but those at least came with some revenue guidance and real infrastructure scale. What we’re seeing now is the AI version of the dot-com press release pump, where you add “.com” to your name and watch the stock skyrocket 20%. Except this time, it’s not Pets.com, it’s OpenAI, and instead of pets, it’s plugins.

OpenAI literally has no skin in this game because it is not public (!). It’s just tossing out integrations and watching the crowd bid up whatever company catches one. We are living in crazy times!

AI will commoditize education

Harvard Business School uses AI to evaluate students’ work: “When students prepare a spreadsheet or do some other work, we are able to give them very rapid feedback using AI tools,” says dean Srikant Datar.

HBS teaches competitive advantage, but may be giving away its own by outsourcing intellectual rigor to a fine-tuned summarizer. We’re not in the disruption phase. We’re in the commoditization phase. LINK

🚜 Caterpillar is a cyclical stock and, apparently, connected to many businesses

It’s always fascinating when a company that’s basically a proxy for GDP suddenly gets re-rated as a proxy for the future. Caterpillar has lived its entire public life as the most cyclical of cyclicals: an earthmover for economic expansions and a pain trade in recessions. You bought it when construction was about to boom and sold it when the music stopped. Simple, dirty, analog. But then something weird happened: cloud computing gave Caterpillar its first AI-driven narrative. Not from automation, robotics, or data analytics, but from turbines. The gear that makes electricity. The boring stuff that powers the sexy stuff.

Now investors are starting to model Caterpillar not as a macro play, but as picks-and-shovels for the GPU gold rush. Data centers don’t just need NVIDIA chips; they need energy: lots of it, consistently, and often independently sourced. Caterpillar, sitting on a quiet turbine business, suddenly looks like it has optionality on the AI boom. When Oracle says it needs more cloud capacity, Caterpillar stock rallies for nine straight sessions.

It’s a classic case of narrative arbitrage. The same machines, but a new story that links them to the exponential curve. In the end, every infrastructure company becomes a tech company, once tech runs out of power. Bloomberg

Working “996” is back

Apparently, the cool new thing in Silicon Valley is to work 9 AM to 9 PM, six days a week. They call it “996”, which sounds like an emergency number but is actually just a cheerful euphemism for voluntary burnout. You get up, you code, you grind, you maybe microdose (hello, Silicon Valley!), and you tweet about how you’re “so grateful to be building with the smartest people I know”. It’s a vibe. A 72-hour-a-week vibe.

Now imagine explaining this to someone in Europe. Picture a German civil servant or a French startup founder hearing that a 24-year-old in Palo Alto is working 60+ hours a week on purpose, and not because of a staffing shortage or a war. Tell them people in San Francisco are working double shifts to optimize LLM latency in multimodal agents, and watch their brain short-circuit. In France, 35 hours a week is a social contract. In California, it’s a part-time job with no equity.

It’s not even clear who’s in control anymore. Elon says “hardcore”, VCs post screenshots of Stripe receipts on Saturdays, and every Gen Z product manager is like, “bro if I sleep I lose edge”. Silicon Valley didn’t just import 996 from China; it reinvented it.

No wonder most new technology gets invented in the US and China today. LINK

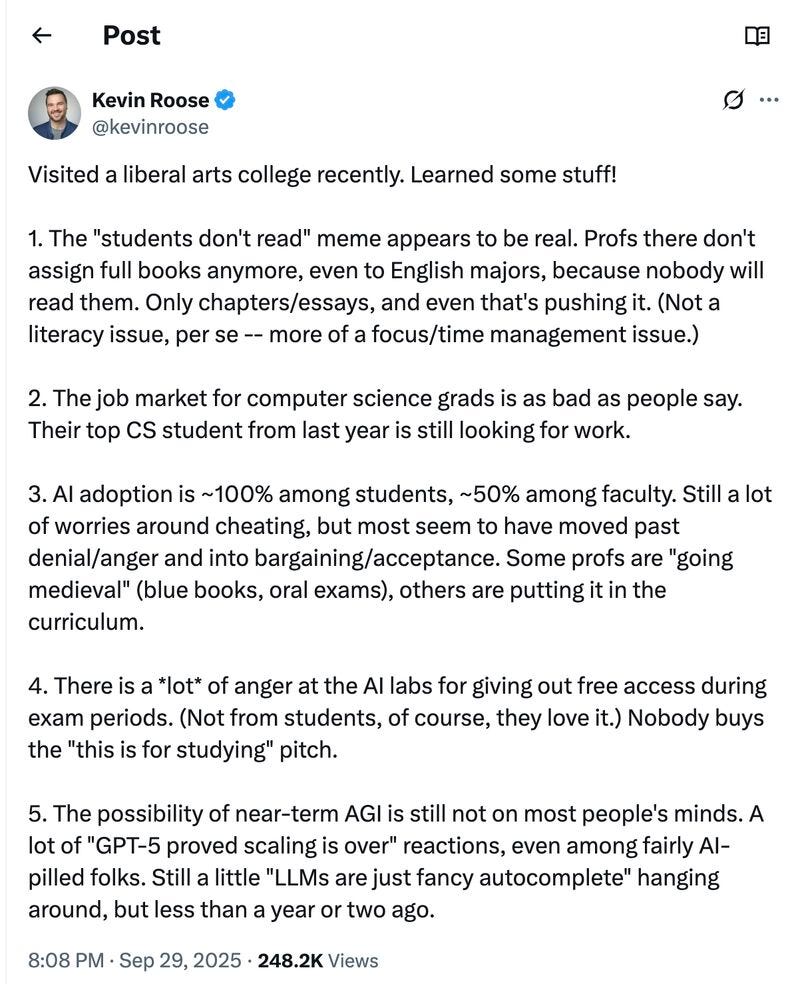

Comments on the use of AI on campus

The assumptions behind the education-industrial complex are challenged all at once.

1/ Pedagogy is facing opportunity cost - Students won’t read books because TikTok exists. Professors know this, so they optimize for survival: assign shorter texts, lower friction.

2/ Credentials aren’t mapping to demand - The CS job market isn’t weak because the skills are useless, but because we’re between paradigms. Most grads are trained for yesterday’s software world, while hiring has shifted to infra, models, and agents. This change is about context.

3/ AI is the new Excel - That students use AI at 100% penetration isn’t a shock. What’s interesting is the 50% adoption rate among faculty. That’s not resistance; it’s the first sign of institutional adaptation. Just as PowerPoint crept into academia in the ’90s, LLMs are becoming infrastructure, not disruption.

This is a slow-motion API migration of the university, from narrative-based knowledge transfer to agent-mediated reasoning environments. Education will still happen. It just won’t look like syllabi and seminar rooms anymore.

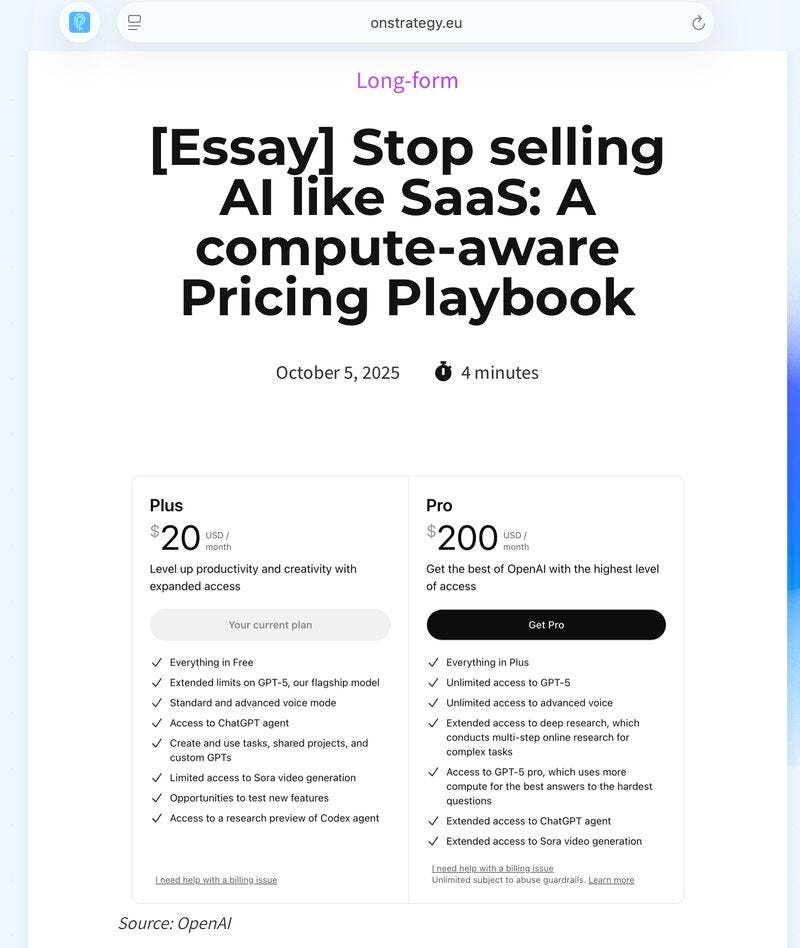

[Essay] 💰 on AI pricing

Everyone says we’re in an AI bubble. Bubbles are expectations outrunning cash flows, while cash flows are pricing wearing an accounting badge. You can’t repeal physics or cut H100 list prices, but you can decide whether to sell GPU time like Slack seats. Price AI like SaaS and you subsidize infinite curiosity. Price it like a utility and heavy users fund their own combustion. The job now is obvious: make pricing compute-aware.

Price the burn, not the hype, and you get a business that survives the bubble, whether it pops or not. Read full text HERE.
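To make the subsidy argument concrete, here is a toy comparison of the two pricing models. It is a minimal sketch under stated assumptions: the per-token cost, the $20 seat price, the 1.5× markup, and the three user profiles are all hypothetical placeholders, not real provider prices or usage data. It compares a flat SaaS-style seat with a compute-aware price (compute cost times a markup).

```python
"""Toy comparison of seat-based vs compute-aware AI pricing.

All numbers below are hypothetical placeholders, not real provider prices.
"""

from dataclasses import dataclass

# Hypothetical inference cost, in dollars per 1,000 tokens served.
COST_PER_1K_TOKENS = 0.01
# Hypothetical flat monthly seat price (SaaS-style, same for everyone).
SEAT_PRICE = 20.0
# Hypothetical markup applied on top of compute cost (utility-style).
USAGE_MARKUP = 1.5


@dataclass
class User:
    name: str
    tokens_per_month: int


def compute_cost(user: User) -> float:
    """Dollars of compute the user burns in a month."""
    return user.tokens_per_month / 1_000 * COST_PER_1K_TOKENS


def seat_margin(user: User) -> float:
    """Margin when every user pays the same flat seat price."""
    return SEAT_PRICE - compute_cost(user)


def usage_margin(user: User) -> float:
    """Margin when price = compute cost * markup (compute-aware pricing)."""
    price = compute_cost(user) * USAGE_MARKUP
    return price - compute_cost(user)


users = [
    User("light", 200_000),       # about $2 of compute per month
    User("typical", 1_000_000),   # about $10
    User("heavy", 10_000_000),    # about $100
]

for u in users:
    print(f"{u.name:8s} cost=${compute_cost(u):7.2f} "
          f"seat margin=${seat_margin(u):8.2f} "
          f"usage margin=${usage_margin(u):7.2f}")
```

With these made-up numbers, the heavy user costs about $100 against $20 of seat revenue, a loss of roughly $80 that the light and typical users (about $18 and $10 of margin) cannot cover. Under the compute-aware price, every user funds their own burn plus the markup.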

Principle: A decision has two legs

Every decision has two legs:

Analyze the data. Build the model, argue with yourself in comments, ask for feedback, produce a beautiful memo that you read the next day, etc.

Decide. Hit “buy/sell/approve/ship”.

Analysis without a decision is accrual accounting for courage: impressive, but nobody got paid.

A decision without analysis is amateur work. To get both legs right, do this:

Write the decision rule before the debate.

Weight opinions by believability (track record + logic).

Decide by the deadline, not by consensus.

Log the decision, expected results, and reversal triggers.

Afterward, compare outcomes to expectations: pain + reflection = progress.

Do this repeatedly, and you build a decision-making machine that improves itself. LINK